
Wednesday, December 27, 2023

Tuesday, December 26, 2023

Partial differential equations

Ordinary differential equations

An ordinary differential equation (ODE) is an equation containing an unknown function of one real or complex variable x, its derivatives, and some given functions of x. The unknown function is generally represented by a variable (often denoted y), which, therefore, depends on x. Thus x is often called the independent variable of the equation. The term "ordinary" is used in contrast with the term partial differential equation, which may be with respect to more than one independent variable.

Linear differential equations are the differential equations that are linear in the unknown function and its derivatives. Their theory is well developed, and in many cases one may express their solutions in terms of integrals.

Most ODEs that are encountered in physics are linear. Therefore, most special functions may be defined as solutions of linear differential equations (see Holonomic function).

As, in general, the solutions of a differential equation cannot be expressed by a closed-form expression, numerical methods are commonly used for solving differential equations on a computer.

Partial differential equations

A partial differential equation (PDE) is a differential equation that contains unknown multivariable functions and their partial derivatives. (This is in contrast to ordinary differential equations, which deal with functions of a single variable and their derivatives.) PDEs are used to formulate problems involving functions of several variables, and are either solved in closed form, or used to create a relevant computer model.

PDEs can be used to describe a wide variety of phenomena in nature such as sound, heat, electrostatics, electrodynamics, fluid flow, elasticity, or quantum mechanics. These seemingly distinct physical phenomena can be formalized similarly in terms of PDEs. Just as ordinary differential equations often model one-dimensional dynamical systems, partial differential equations often model multidimensional systems. Stochastic partial differential equations generalize partial differential equations for modeling randomness.

Non-linear differential equations

A non-linear differential equation is a differential equation that is not a linear equation in the unknown function and its derivatives (the linearity or non-linearity in the arguments of the function are not considered here). There are very few methods of solving nonlinear differential equations exactly; those that are known typically depend on the equation having particular symmetries. Nonlinear differential equations can exhibit very complicated behaviour over extended time intervals, characteristic of chaos. Even the fundamental questions of existence, uniqueness, and extendability of solutions for nonlinear differential equations, and well-posedness of initial and boundary value problems for nonlinear PDEs are hard problems and their resolution in special cases is considered to be a significant advance in the mathematical theory (cf. Navier–Stokes existence and smoothness). However, if the differential equation is a correctly formulated representation of a meaningful physical process, then one expects it to have a solution.[11]

Linear differential equations frequently appear as approximations to nonlinear equations. These approximations are only valid under restricted conditions. For example, the harmonic oscillator equation is an approximation to the nonlinear pendulum equation that is valid for small amplitude oscillations.
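As a rough numerical illustration of the small-amplitude claim, the sketch below integrates the pendulum equation θ'' = −(g/L) sin θ and its linearization θ'' = −(g/L) θ side by side and records the largest gap between the two angles. The fixed-step Runge–Kutta integrator, the function names, and the parameter values (g/L = 9.81, a 2-second window) are assumptions chosen for illustration, not part of the original text.

```python
import math

def rk4_step(f, t, y, h):
    """One classical Runge-Kutta step for y' = f(t, y), with y a tuple."""
    def shifted(base, slope, scale):
        return tuple(b + scale * s for b, s in zip(base, slope))
    k1 = f(t, y)
    k2 = f(t + h / 2, shifted(y, k1, h / 2))
    k3 = f(t + h / 2, shifted(y, k2, h / 2))
    k4 = f(t + h, shifted(y, k3, h))
    return tuple(
        yi + h / 6 * (a + 2 * b + 2 * c + d)
        for yi, a, b, c, d in zip(y, k1, k2, k3, k4)
    )

def max_gap(theta0, g_over_l=9.81, t_end=2.0, h=0.001):
    """Largest |pendulum - linearized| angle difference over [0, t_end],
    starting at angle theta0 (radians) with zero angular velocity."""
    def pendulum(t, y):
        return (y[1], -g_over_l * math.sin(y[0]))
    def linear(t, y):
        return (y[1], -g_over_l * y[0])
    yp = yl = (theta0, 0.0)
    gap = 0.0
    for i in range(round(t_end / h)):
        t = i * h
        yp = rk4_step(pendulum, t, yp, h)
        yl = rk4_step(linear, t, yl, h)
        gap = max(gap, abs(yp[0] - yl[0]))
    return gap

small = max_gap(0.05)  # roughly 3 degrees: the two models nearly coincide
large = max_gap(1.5)   # roughly 86 degrees: the linear model drifts away
```

Comparing `small` with `large` shows how quickly the harmonic-oscillator approximation degrades once the starting angle is no longer small.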

Equation order and degree

The order of the differential equation is the highest order of derivative of the unknown function that appears in the differential equation. For example, an equation containing only first-order derivatives is a first-order differential equation, an equation containing the second-order derivative is a second-order differential equation, and so on.[12][13]

When it is written as a polynomial equation in the unknown function and its derivatives, the degree of the differential equation is, depending on the context, the polynomial degree in the highest derivative of the unknown function,[14] or its total degree in the unknown function and its derivatives. In particular, a linear differential equation has degree one for both meanings, but the non-linear differential equation $y' + y^2 = 0$ is of degree one for the first meaning but not for the second one.

Differential equations that describe natural phenomena almost always have only first and second order derivatives in them, but there are some exceptions, such as the thin-film equation, which is a fourth order partial differential equation.

Examples

In the first group of examples u is an unknown function of x, and c and ω are constants that are supposed to be known. Two broad classifications of both ordinary and partial differential equations consist of distinguishing between linear and nonlinear differential equations, and between homogeneous differential equations and heterogeneous ones.

- Heterogeneous first-order linear constant coefficient ordinary differential equation: $\frac{du}{dx} = cu + x^2$

- Homogeneous second-order linear ordinary differential equation: $\frac{d^2u}{dx^2} - x\frac{du}{dx} + u = 0$

- Homogeneous second-order linear constant coefficient ordinary differential equation describing the harmonic oscillator: $\frac{d^2u}{dx^2} + \omega^2 u = 0$

- Heterogeneous first-order nonlinear ordinary differential equation: $\frac{du}{dx} = u^2 + 4$

- Second-order nonlinear (due to sine function) ordinary differential equation describing the motion of a pendulum of length L: $L\frac{d^2u}{dx^2} + g\sin u = 0$

In the next group of examples, the unknown function u depends on two variables x and t or x and y.

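- Homogeneous first-order linear partial differential equation: $\frac{\partial u}{\partial t} + t\frac{\partial u}{\partial x} = 0$

- Homogeneous second-order linear constant coefficient partial differential equation of elliptic type, the Laplace equation: $\frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2} = 0$

- Homogeneous third-order non-linear partial differential equation, the KdV equation: $\frac{\partial u}{\partial t} = 6u\frac{\partial u}{\partial x} - \frac{\partial^3 u}{\partial x^3}$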

Existence of solutions

Solving differential equations is not like solving algebraic equations. Not only are their solutions often unclear, but whether solutions are unique or exist at all are also notable subjects of interest.

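For first order initial value problems, the Peano existence theorem gives one set of circumstances in which a solution exists. Given any point $(a, b)$ in the xy-plane, define some rectangular region $Z$, such that $Z = [l, m] \times [n, p]$ and $(a, b)$ is in the interior of $Z$. If we are given a differential equation $\frac{dy}{dx} = g(x, y)$ and the condition that $y = b$ when $x = a$, then there is locally a solution to this problem if $g(x, y)$ is continuous on $Z$. This solution exists on some interval with its center at $a$. The solution may not be unique.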

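However, this only helps us with first order initial value problems. Suppose we had a linear initial value problem of the nth order:

$$f_n(x)\frac{d^n y}{dx^n} + \cdots + f_1(x)\frac{dy}{dx} + f_0(x)\,y = g(x)$$

such that

$$y(x_0) = y_0,\quad y'(x_0) = y'_0,\quad y''(x_0) = y''_0,\quad \ldots$$

For any nonzero $f_n(x)$, if $\{f_0, f_1, \ldots\}$ and $g$ are continuous on some interval containing $x_0$, then $y$ exists and is unique.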

Related concepts

- A delay differential equation (DDE) is an equation for a function of a single variable, usually called time, in which the derivative of the function at a certain time is given in terms of the values of the function at earlier times.

- Integral equations may be viewed as the analog to differential equations where instead of the equation involving derivatives, the equation contains integrals.[16]

- An integro-differential equation (IDE) is an equation that combines aspects of a differential equation and an integral equation.

- A stochastic differential equation (SDE) is an equation in which the unknown quantity is a stochastic process and the equation involves some known stochastic processes, for example, the Wiener process in the case of diffusion equations.

- A stochastic partial differential equation (SPDE) is an equation that generalizes SDEs to include space-time noise processes, with applications in quantum field theory and statistical mechanics.

- An ultrametric pseudo-differential equation is an equation which contains p-adic numbers in an ultrametric space. Mathematical models that involve ultrametric pseudo-differential equations use pseudo-differential operators instead of differential operators.

- A differential algebraic equation (DAE) is a differential equation comprising differential and algebraic terms, given in implicit form.

Connection to difference equations

The theory of differential equations is closely related to the theory of difference equations, in which the coordinates assume only discrete values, and the relationship involves values of the unknown function or functions and values at nearby coordinates. Many methods to compute numerical solutions of differential equations or study the properties of differential equations involve the approximation of the solution of a differential equation by the solution of a corresponding difference equation.
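The link between the two theories can be made concrete with a small sketch. Below, the differential equation y' = r·y is replaced by the difference equation y[n+1] = y[n] + h·r·y[n] (the forward Euler scheme), and the result at t = 1 is compared with the exact solution for several step sizes. The rate, initial value, and step counts are illustrative assumptions.

```python
import math

def difference_solution(rate, y0, h, steps):
    """Iterate y[n+1] = y[n] + h * rate * y[n], the forward-Euler
    difference equation that stands in for y' = rate * y."""
    y = y0
    for _ in range(steps):
        y = y + h * rate * y
    return y

rate, y0, t_end = -2.0, 1.0, 1.0
exact = y0 * math.exp(rate * t_end)

# Error at t_end for progressively finer grids.
errors = []
for steps in (10, 100, 1000):
    h = t_end / steps
    approx = difference_solution(rate, y0, h, steps)
    errors.append(abs(approx - exact))
```

The entries of `errors` shrink roughly in proportion to the step size, which is the behaviour expected of a first-order scheme.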

Applications

The study of differential equations is a wide field in pure and applied mathematics, physics, and engineering. All of these disciplines are concerned with the properties of differential equations of various types. Pure mathematics focuses on the existence and uniqueness of solutions, while applied mathematics emphasizes the rigorous justification of the methods for approximating solutions. Differential equations play an important role in modeling virtually every physical, technical, or biological process, from celestial motion, to bridge design, to interactions between neurons. Differential equations such as those used to solve real-life problems may not necessarily be directly solvable, i.e. do not have closed form solutions. Instead, solutions can be approximated using numerical methods.

Many fundamental laws of physics and chemistry can be formulated as differential equations. In biology and economics, differential equations are used to model the behavior of complex systems. The mathematical theory of differential equations first developed together with the sciences where the equations had originated and where the results found application. However, diverse problems, sometimes originating in quite distinct scientific fields, may give rise to identical differential equations. Whenever this happens, mathematical theory behind the equations can be viewed as a unifying principle behind diverse phenomena. As an example, consider the propagation of light and sound in the atmosphere, and of waves on the surface of a pond. All of them may be described by the same second-order partial differential equation, the wave equation, which allows us to think of light and sound as forms of waves, much like familiar waves in the water. Conduction of heat, the theory of which was developed by Joseph Fourier, is governed by another second-order partial differential equation, the heat equation. It turns out that many diffusion processes, while seemingly different, are described by the same equation; the Black–Scholes equation in finance is, for instance, related to the heat equation.

The number of differential equations that have received a name in various scientific areas bears witness to the importance of the topic. See List of named differential equations.

Differential equation

From Wikipedia, the free encyclopedia


In mathematics, a differential equation is an equation that relates one or more unknown functions and their derivatives.[1] In applications, the functions generally represent physical quantities, the derivatives represent their rates of change, and the differential equation defines a relationship between the two. Such relations are common; therefore, differential equations play a prominent role in many disciplines including engineering, physics, economics, and biology.

The study of differential equations consists mainly of the study of their solutions (the set of functions that satisfy each equation), and of the properties of their solutions. Only the simplest differential equations are soluble by explicit formulas; however, many properties of solutions of a given differential equation may be determined without computing them exactly.

Often when a closed-form expression for the solutions is not available, solutions may be approximated numerically using computers. The theory of dynamical systems puts emphasis on qualitative analysis of systems described by differential equations, while many numerical methods have been developed to determine solutions with a given degree of accuracy.

History

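Differential equations came into existence with the invention of calculus by Newton and Leibniz. In Chapter 2 of his 1671 work Methodus fluxionum et Serierum Infinitarum,[2] Isaac Newton listed three kinds of differential equations:

$$\begin{aligned}\frac{dy}{dx} &= f(x)\\[4pt]\frac{dy}{dx} &= f(x,y)\\[4pt]x_1\frac{\partial y}{\partial x_1} + x_2\frac{\partial y}{\partial x_2} &= y\end{aligned}$$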

In all these cases, y is an unknown function of x (or of x1 and x2), and f is a given function.

He solves these examples and others using infinite series and discusses the non-uniqueness of solutions.

Jacob Bernoulli proposed the Bernoulli differential equation in 1695.[3] This is an ordinary differential equation of the form

$$y' + P(x)\,y = Q(x)\,y^n$$

for which the following year Leibniz obtained solutions by simplifying it.[4]

Historically, the problem of a vibrating string such as that of a musical instrument was studied by Jean le Rond d'Alembert, Leonhard Euler, Daniel Bernoulli, and Joseph-Louis Lagrange.[5][6][7][8] In 1746, d’Alembert discovered the one-dimensional wave equation, and within ten years Euler discovered the three-dimensional wave equation.[9]

The Euler–Lagrange equation was developed in the 1750s by Euler and Lagrange in connection with their studies of the tautochrone problem. This is the problem of determining a curve on which a weighted particle will fall to a fixed point in a fixed amount of time, independent of the starting point. Lagrange solved this problem in 1755 and sent the solution to Euler. Both further developed Lagrange's method and applied it to mechanics, which led to the formulation of Lagrangian mechanics.

In 1822, Fourier published his work on heat flow in Théorie analytique de la chaleur (The Analytic Theory of Heat),[10] in which he based his reasoning on Newton's law of cooling, namely, that the flow of heat between two adjacent molecules is proportional to the extremely small difference of their temperatures. Contained in this book was Fourier's proposal of his heat equation for conductive diffusion of heat. This partial differential equation is now a common part of the mathematical physics curriculum.

Example

In classical mechanics, the motion of a body is described by its position and velocity as the time value varies. Newton's laws allow these variables to be expressed dynamically (given the position, velocity, acceleration and various forces acting on the body) as a differential equation for the unknown position of the body as a function of time.

In some cases, this differential equation (called an equation of motion) may be solved explicitly.

An example of modeling a real-world problem using differential equations is the determination of the velocity of a ball falling through the air, considering only gravity and air resistance. The ball's acceleration towards the ground is the acceleration due to gravity minus the deceleration due to air resistance. Gravity is considered constant, and air resistance may be modeled as proportional to the ball's velocity. This means that the ball's acceleration, which is a derivative of its velocity, depends on the velocity (and the velocity depends on time). Finding the velocity as a function of time involves solving a differential equation and verifying its validity.
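A minimal sketch of the falling-ball model, assuming air resistance exactly proportional to velocity and a ball released from rest: the equation is dv/dt = g − (k/m)·v, whose closed-form solution is v(t) = (g·m/k)(1 − e^(−(k/m)t)). The constants below, and the use of a central difference to check the solution against the equation, are illustrative assumptions.

```python
import math

G = 9.81        # gravitational acceleration in m/s^2 (assumed value)
K_OVER_M = 0.5  # drag coefficient divided by mass, in 1/s (assumed value)

def velocity(t):
    """Closed-form solution of dv/dt = G - K_OVER_M * v with v(0) = 0."""
    return (G / K_OVER_M) * (1.0 - math.exp(-K_OVER_M * t))

def residual(t, dt=1e-6):
    """How far velocity() is from satisfying the ODE at time t,
    using a central-difference estimate of dv/dt."""
    dv_dt = (velocity(t + dt) - velocity(t - dt)) / (2 * dt)
    return dv_dt - (G - K_OVER_M * velocity(t))

# As t grows, drag balances gravity and the velocity levels off here.
terminal_velocity = G / K_OVER_M
```

Evaluating `residual` at a few times is one simple way to carry out the verification step mentioned above: a value close to zero indicates that the candidate function satisfies the equation at that point.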

Types

Differential equations can be divided into several types. Apart from describing the properties of the equation itself, these classes of differential equations can help inform the choice of approach to a solution. Commonly used distinctions include whether the equation is ordinary or partial, linear or non-linear, and homogeneous or heterogeneous. This list is far from exhaustive; there are many other properties and subclasses of differential equations which can be very useful in specific contexts.

Electronic analog computers

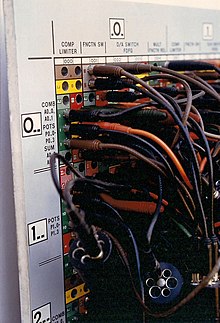

Electronic analog computers typically have front panels with numerous jacks (single-contact sockets) that permit patch cords (flexible wires with plugs at both ends) to create the interconnections that define the problem setup. There are also precision high-resolution potentiometers (variable resistors) for setting up (and, when needed, varying) scale factors, and usually a zero-center analog pointer-type meter for modest-accuracy voltage measurement. Stable, accurate voltage sources provide known magnitudes.

Typical electronic analog computers contain anywhere from a few to a hundred or more operational amplifiers ("op amps"), so named because they perform mathematical operations. Op amps are a particular type of feedback amplifier with very high gain and stable input (low and stable offset). They are always used with precision feedback components that, in operation, all but cancel out the currents arriving from input components. The majority of op amps in a representative setup are summing amplifiers, which add and subtract analog voltages, providing the result at their output jacks. Op amps with capacitor feedback are also usually included in a setup; they integrate the sum of their inputs with respect to time.

Integrating with respect to another variable is the nearly exclusive province of mechanical analog integrators; it is almost never done in electronic analog computers. However, given that a problem solution does not change with time, time can serve as one of the variables.
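The way summing amplifiers and time integrators are patched together to solve an equation can be imitated in software. The sketch below wires two idealized integrator blocks and one summing block into a loop that solves x'' = −ω²x. The class and function names are hypothetical, the blocks are non-inverting (real op-amp stages invert the sign), and the small time step merely stands in for continuous time.

```python
import math

class Integrator:
    """Idealized integrator block: its output is the running time
    integral of its input, starting from an initial condition."""
    def __init__(self, initial):
        self.out = initial

    def step(self, signal, dt):
        self.out += signal * dt
        return self.out

def summer(*weighted_inputs):
    """Idealized summing amplifier: adds up (gain, signal) pairs."""
    return sum(gain * signal for gain, signal in weighted_inputs)

def run_oscillator(omega=2.0, t_end=1.0, dt=1e-4):
    """Patch two integrators in a loop to solve x'' = -omega^2 * x
    with x(0) = 1 and x'(0) = 0, and return x(t_end)."""
    velocity = Integrator(0.0)
    position = Integrator(1.0)
    for _ in range(round(t_end / dt)):
        accel = summer((-omega ** 2, position.out))  # feedback path
        v = velocity.step(accel, dt)                 # first integrator
        position.step(v, dt)                         # second integrator
    return position.out

x_end = run_oscillator()
```

With these settings `x_end` should land close to cos(2), the exact solution at t = 1; the remaining discrepancy comes from the finite time step rather than from the patching scheme.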

Other computing elements include analog multipliers, nonlinear function generators, and analog comparators.

Electrical elements such as inductors and capacitors used in electrical analog computers had to be carefully manufactured to reduce non-ideal effects. For example, in the construction of AC power network analyzers, one motive for using higher frequencies for the calculator (instead of the actual power frequency) was that higher-quality inductors could be more easily made. Many general-purpose analog computers avoided the use of inductors entirely, re-casting the problem in a form that could be solved using only resistive and capacitive elements, since high-quality capacitors are relatively easy to make.

The use of electrical properties in analog computers means that calculations are normally performed in real time (or faster), at a speed determined mostly by the frequency response of the operational amplifiers and other computing elements. In the history of electronic analog computers, there were some special high-speed types.

Nonlinear functions and calculations can be constructed to a limited precision (three or four digits) by designing function generators—special circuits of various combinations of resistors and diodes to provide the nonlinearity. Typically, as the input voltage increases, progressively more diodes conduct.
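A software analogue of such a function generator, offered as an illustrative sketch rather than a circuit model: each branch contributes nothing until the input passes its breakpoint and then adds a fixed change in slope, so the output is a piecewise-linear approximation of the target function. The function names, the choice of x² as target, and the ten evenly spaced breakpoints are assumptions.

```python
def build_generator(func, breakpoints):
    """Return (offset, [(breakpoint, slope_change), ...]) such that the
    piecewise-linear output matches func at every breakpoint."""
    segments = []
    prev_slope = 0.0
    for left, right in zip(breakpoints, breakpoints[1:]):
        slope = (func(right) - func(left)) / (right - left)
        segments.append((left, slope - prev_slope))
        prev_slope = slope
    return func(breakpoints[0]), segments

def generate(x, offset, segments):
    """Each segment behaves like a diode branch that starts conducting
    once the input exceeds its breakpoint."""
    return offset + sum(ds * max(0.0, x - b) for b, ds in segments)

breakpoints = [i / 10 for i in range(11)]  # 0.0, 0.1, ..., 1.0
offset, segments = build_generator(lambda x: x * x, breakpoints)

# Worst-case approximation error on a fine grid over [0, 1].
worst = max(
    abs(generate(i / 1000, offset, segments) - (i / 1000) ** 2)
    for i in range(1001)
)
```

For this target the worst error sits midway between breakpoints, and adding more branches tightens the approximation.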

When compensated for temperature, the forward voltage drop of a transistor's base-emitter junction can provide a usably accurate logarithmic or exponential function. Op amps scale the output voltage so that it is usable with the rest of the computer.

Any physical process that models some computation can be interpreted as an analog computer. Some examples, invented for the purpose of illustrating the concept of analog computation, include using a bundle of spaghetti as a model of sorting numbers; a board, a set of nails, and a rubber band as a model of finding the convex hull of a set of points; and strings tied together as a model of finding the shortest path in a network. These are all described in Dewdney (1984).

Components


Analog computers often have a complicated framework, but they have, at their core, a set of key components that perform the calculations. The operator manipulates these through the computer's framework.

Key hydraulic components might include pipes, valves and containers.

Key mechanical components might include rotating shafts for carrying data within the computer, miter gear differentials, disc/ball/roller integrators, cams (2-D and 3-D), mechanical resolvers and multipliers, and torque servos.

Key electrical/electronic components might include:

- precision resistors and capacitors

- operational amplifiers

- multipliers

- potentiometers

- fixed-function generators

The core mathematical operations used in an electric analog computer are:

- addition

- integration with respect to time

- inversion

- multiplication

- exponentiation

- logarithm

- division

In some analog computer designs, multiplication is much preferred to division. Division is carried out with a multiplier in the feedback path of an operational amplifier.
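The feedback arrangement can be sketched numerically. In the loop below, the amplifier keeps adjusting its output z until the multiplier output y·z cancels the input x, at which point z = x/y. This is a simplified discrete-time model with hypothetical names and assumed gain and step values; it is restricted to a range of positive y for which the loop is stable.

```python
def divide_by_feedback(x, y, gain=1000.0, dt=1e-4, steps=2000):
    """Drive z until the multiplier output y*z matches x, so z -> x/y.
    The update is stable only for 0 < y < 2 / (gain * dt)."""
    if not 0.0 < y < 2.0 / (gain * dt):
        raise ValueError("y is outside the stable range of this sketch")
    z = 0.0
    for _ in range(steps):
        error = x - y * z       # summing junction: input minus feedback
        z += gain * error * dt  # high-gain amplifier with a small lag
    return z

quotient = divide_by_feedback(6.0, 4.0)
```

The stability restriction reflects the practical point that the feedback must remain negative, which constrains the sign of the divisor.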

Differentiation with respect to time is not frequently used, and in practice is avoided by redefining the problem when possible. It corresponds in the frequency domain to a high-pass filter, which means that high-frequency noise is amplified; differentiation also risks instability.
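The noise argument can be checked with simple arithmetic. For a sinusoid A·sin(2πft), ideal differentiation multiplies the amplitude by 2πf and ideal integration divides it by 2πf. The sketch below applies both operations to a slow signal and to a small high-frequency disturbance and compares the resulting signal-to-noise amplitude ratios; the amplitudes and frequencies are assumed values.

```python
import math

def amplitude_after(operation, amplitude, freq):
    """Amplitude of A*sin(2*pi*f*t) after ideal differentiation or
    integration with respect to time."""
    omega = 2 * math.pi * freq
    if operation == "differentiate":
        return amplitude * omega
    if operation == "integrate":
        return amplitude / omega
    raise ValueError(operation)

signal = (1.0, 1.0)        # (amplitude, frequency in Hz), assumed
noise = (0.001, 10_000.0)  # small but high-frequency disturbance, assumed

snr_in = signal[0] / noise[0]
snr_diff = (amplitude_after("differentiate", *signal)
            / amplitude_after("differentiate", *noise))
snr_int = (amplitude_after("integrate", *signal)
           / amplitude_after("integrate", *noise))
```

Under these assumptions the differentiator leaves the noise larger than the signal, while the integrator suppresses it, which is consistent with the preference for integration described above.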

Limitations


In general, analog computers are limited by non-ideal effects. An analog signal is composed of four basic components: DC and AC magnitudes, frequency, and phase. The real limits of range on these characteristics limit analog computers. Some of these limits include the operational amplifier offset, finite gain, and frequency response, noise floor, non-linearities, temperature coefficient, and parasitic effects within semiconductor devices. For commercially available electronic components, ranges of these aspects of input and output signals are always figures of merit.

Decline

From the 1950s to the 1970s, digital computers based first on vacuum tubes, then on transistors, integrated circuits, and finally microprocessors became more economical and precise. This led digital computers to largely replace analog computers. Even so, some research in analog computation is still being done. A few universities still use analog computers to teach control system theory. The American company Comdyna manufactured small analog computers.[40] At Indiana University Bloomington, Jonathan Mills has developed the Extended Analog Computer based on sampling voltages in a foam sheet.[41] At the Harvard Robotics Laboratory,[42] analog computation is a research topic. Lyric Semiconductor's error correction circuits use analog probabilistic signals. Slide rules are still popular among aircraft personnel.

Resurgence

With the development of very-large-scale integration (VLSI) technology, Yannis Tsividis' group at Columbia University has been revisiting analog/hybrid computer design in standard CMOS processes. Two VLSI chips have been developed: an 80th-order analog computer (250 nm) by Glenn Cowan[43] in 2005[44] and a 4th-order hybrid computer (65 nm) by Ning Guo in 2015,[45] both targeting energy-efficient ODE/PDE applications. Cowan's chip contains 16 macros, in which there are 25 analog computing blocks, namely integrators, multipliers, fanouts, and a few nonlinear blocks. Guo's chip contains one macro block, in which there are 26 computing blocks including integrators, multipliers, fanouts, ADCs, SRAMs and DACs. Arbitrary nonlinear function generation is made possible by the ADC+SRAM+DAC chain, where the SRAM block stores the nonlinear function data. The experiments from the related publications revealed that VLSI analog/hybrid computers demonstrated an advantage of about 1–2 orders of magnitude in both solution time and energy while achieving accuracy within 5%, which points to the promise of using analog/hybrid computing techniques in the area of energy-efficient approximate computing. In 2016, a team of researchers developed a compiler to solve differential equations using analog circuits.[46]

Analog computers are also used in neuromorphic computing, and in 2021 a group of researchers showed that a specific type of artificial neural network called a spiking neural network was able to work with analog neuromorphic computers.[47]

Practical examples

These are examples of analog computers that have been constructed or practically used:

- Boeing B-29 Superfortress Central Fire Control System

- Deltar

- E6B flight computer

- Kerrison Predictor

- Leonardo Torres y Quevedo's Analogue Calculating Machines based on "fusee sans fin"

- Librascope, aircraft weight and balance computer

- Mechanical computer

- Mechanical watch

- Mechanical integrators, for example, the planimeter

- Nomogram

- Norden bombsight

- Rangekeeper, and related fire control computers

- Scanimate

- Torpedo Data Computer

- Torquetum

- Water integrator

- MONIAC, economic modelling

- Ishiguro Storm Surge Computer

Analog (audio) synthesizers can also be viewed as a form of analog computer, and their technology was originally based in part on electronic analog computer technology. The ARP 2600's Ring Modulator was actually a moderate-accuracy analog multiplier.

The Simulation Council (or Simulations Council) was an association of analog computer users in the US. It is now known as The Society for Modeling and Simulation International. The Simulation Council newsletters from 1952 to 1963 are available online and show the concerns and technologies of the time, and the common use of analog computers for missilery.[48]


![{\displaystyle {\begin{aligned}{\frac {dy}{dx}}&=f(x)\\[4pt]{\frac {dy}{dx}}&=f(x,y)\\[4pt]x_{1}{\frac {\partial y}{\partial x_{1}}}&+x_{2}{\frac {\partial y}{\partial x_{2}}}=y\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/d17bdcadcae4f4602463dba2e7ad439b31c3fa5b)